- +90 216 455-1865

- info@compecta.com

All-In-One & Ready to Use

CompecTA SuperNova Engine™ is a super-fast and energy-efficient server solution for Deep Learning and AI needs.

Built with the world’s best hardware, software, and systems engineering, and backed by the years of experience of CompecTA HPC Solutions.

It comes with Deep Learning neural network libraries, Deep Learning frameworks, the operating system, and drivers pre-installed and ready to use.

Enterprise Level Support

CompecTA SuperNova Engine™ comes with an Enterprise Level Support package from HPC experts with over 20 years of experience in the field.

We will assist you with any problem you may encounter with your system.

Free CPU Time

CompecTA SuperNova Engine™ comes with 100,000 hours of CPU time on FeynmanGrid™, CompecTA's in-house HPC cluster service.

Use this opportunity to gain experience with HPC clusters, benchmark the performance of any application, or test new code and applications.
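To put the allowance in perspective, here is a minimal sketch that converts CPU hours into wall-clock time for a job that keeps a given number of cores busy. It assumes the allowance is counted in core-hours (one core busy for one hour uses one hour), which this page does not state explicitly; `wall_clock_hours` is a hypothetical helper written only for this illustration.

```python
# Sketch: how far a CPU-time allowance stretches in wall-clock time.
# ASSUMPTION: CPU time is counted in core-hours (1 core busy for 1 hour = 1 CPU hour).

ALLOWANCE_CPU_HOURS = 100_000  # free allowance offered on FeynmanGrid


def wall_clock_hours(cpu_hours: float, cores_per_job: int) -> float:
    """Hours a job can run when it keeps `cores_per_job` cores busy the whole time."""
    if cores_per_job <= 0:
        raise ValueError("cores_per_job must be positive")
    return cpu_hours / cores_per_job


for cores in (1, 64, 1024):
    hours = wall_clock_hours(ALLOWANCE_CPU_HOURS, cores)
    print(f"{cores:>5} cores -> {hours:,.1f} h ({hours / 24:,.1f} days)")
```

Wider jobs finish sooner but consume the same allowance; under the core-hour assumption, the total work available stays fixed at 100,000 CPU hours.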

CompecTA SuperNova™ Series

Super-fast & Scalable Server Systems

SuperNova Engine™ A30 POPULAR

660 TFLOPS of Tensor Performance

41.2 TFLOPS of FP32 Performance

20.8 TFLOPS of FP64 Performance

1,320 TOPS of INT8 Tensor Performance

Best suited for:

Deep Learning Development

Fast Neural Network Training

Fast Inferencing

prices starting from

$38,995 (Excluding Taxes)

CNVAIS1-A30V1

- 19" 1U Rackmount Chassis 4-way PCIe GPUs

- Dual Intel® Xeon® CPUs (up to 64 cores)

- 512 GB DDR4 3200MHz ECC Memory (up to 2TB)

- 4 x NVIDIA A30 24GB HBM2 Memory @ 933GB/s 165W

- 14,336 Total CUDA® Cores

- 896 Total Tensor Cores

- Up to 16 MIG (Multi-Instance GPU) instances at 6GB each

- 2 x 1.92TB NVMe Disks (up to 4 disks)

- Ubuntu Linux 20.04 / 22.04 LTS

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- Anaconda Data Science Platform

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

- InfiniBand HDR 200Gb/s network for multi-node GPUDirect RDMA support

SuperNova Engine™ A100 BEST

1,248 TFLOPS of Tensor Performance

78 TFLOPS of FP32 Performance

38.8 TFLOPS of FP64 Performance

2,496 TOPS of INT8 Tensor Performance

Best suited for:

Deep Learning Development

Super-fast Neural Network Training

Super-fast Inferencing

prices starting from

$79,495 (Excluding Taxes)

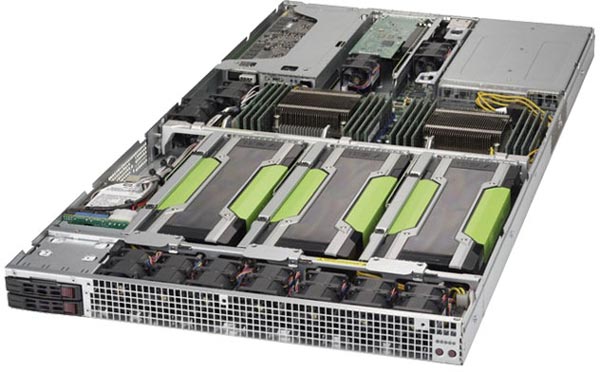

CNVAIS1-A100V2

- 19" 1U Rackmount Chassis 4-way PCIe GPUs

- Dual Intel® Xeon® CPUs (up to 64 cores)

- 1 TB DDR4 3200MHz ECC Memory (up to 2TB)

- 4 x NVIDIA A100 80GB HBM2 Memory @ 1935GB/s 300W

- 27,648 Total CUDA® Cores

- 1,728 Total Tensor Cores

- Up to 28 MIG (Multi-Instance GPU) instances at 10GB each

- 2 x 1.92TB NVMe Disks (up to 4 disks)

- Ubuntu Linux 20.04 / 22.04 LTS

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- Anaconda Data Science Platform

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

- InfiniBand HDR 200Gb/s network for multi-node GPUDirect RDMA support
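The headline figures for a multi-GPU node are the per-GPU peak specifications multiplied by the number of GPUs. The sketch below checks this for the four-GPU A100 configuration. The per-GPU values are NVIDIA's published A100 80GB PCIe peak figures (dense, without sparsity) and are not stated on this page, so verify them against the official datasheet; `node_totals` is a hypothetical helper for illustration only.

```python
# Sketch: node totals = per-GPU peak figures x number of GPUs.
# Per-GPU values: NVIDIA A100 80GB PCIe published peaks (dense, no sparsity).
# These are assumptions to verify against NVIDIA's datasheet.

A100_80GB_PCIE = {
    "fp16_tensor_tflops": 312.0,
    "fp32_tflops": 19.5,
    "fp64_tflops": 9.7,
    "int8_tensor_tops": 624.0,
    "cuda_cores": 6912,
    "tensor_cores": 432,
    "mig_instances": 7,  # up to 7 MIG instances per GPU (10GB each on the 80GB card)
    "memory_gb": 80,
}


def node_totals(per_gpu: dict, gpu_count: int) -> dict:
    """Scale every per-GPU figure linearly by the number of GPUs in the node."""
    return {key: value * gpu_count for key, value in per_gpu.items()}


totals = node_totals(A100_80GB_PCIE, 4)
for key, value in totals.items():
    print(f"{key:>20}: {value:,}")
```

These are theoretical peaks: linear scaling holds for the spec sheet, but real workloads rarely scale perfectly across GPUs.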

SuperNova Engine™ NA104 with NVLINK

1,248 TFLOPS of Tensor Performance

78 TFLOPS of FP32 Performance

38.8 TFLOPS of FP64 Performance

2,496 TOPS of INT8 Tensor Performance

Best suited for:

Super-fast Multi-GPU Workloads

Deep Learning Development

Super-fast Neural Network Training

Super-fast Inferencing

REQUEST A QUOTE

CNVAIS1-NA104V2

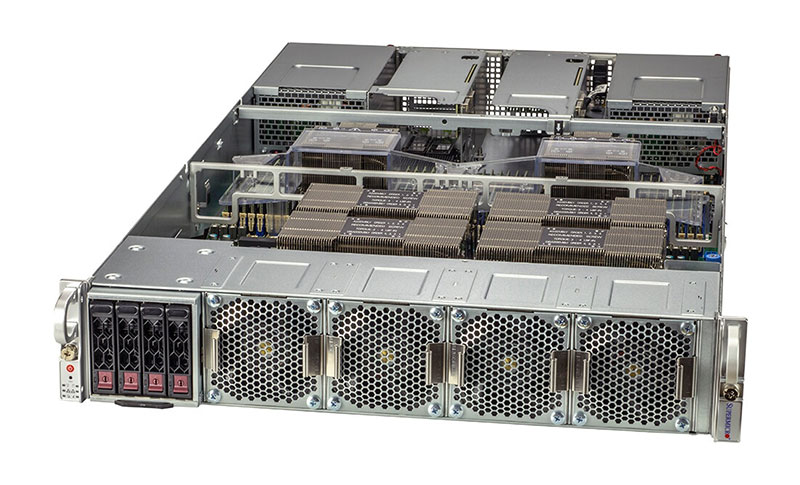

- 19" 2U Rackmount Chassis 4-way NVLINK GPUs

- Dual Intel® Xeon® CPUs (up to 80 cores)

- 1 TB DDR4 3200MHz ECC Memory (up to 4TB)

- HGX A100 4-GPU SXM4 80GB HBM2e NVLINK Multi-GPU Board 2039GB/s 400W

- 27,648 Total CUDA® Cores

- 1,728 Total Tensor Cores

- Up to 28 MIG (Multi-Instance GPU) instances at 10GB each

- 2 x 1.92TB SATA SSD Disks (up to 4 disks)

- Ubuntu Linux 20.04 / 22.04 LTS

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- Anaconda Data Science Platform

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

- InfiniBand HDR 200Gb/s network for multi-node GPUDirect RDMA support

SuperNova Engine™ NA108 with NVLINK BEST

2,500 TFLOPS of Tensor Performance

156 TFLOPS of FP32 Performance

78 TFLOPS of FP64 Performance

5,000 TOPS of INT8 Tensor Performance

Best suited for:

Super-fast Multi-GPU Workloads

Deep Learning Development

Super-fast Neural Network Training

Super-fast Inferencing

REQUEST A QUOTE

CNVAIS1-NA108V2

- 19" 4U Rackmount Chassis 8-way NVLINK GPUs

- Dual Intel® Xeon® CPUs (up to 80 cores)

- 2 TB DDR4 3200MHz ECC Memory (up to 4TB)

- HGX A100 8-GPU SXM4 80GB HBM2e NVLINK Multi-GPU Board 2039GB/s 400W-500W

- 55,296 Total CUDA® Cores

- 3,456 Total Tensor Cores

- Up to 56 MIG (Multi-Instance GPU) instances at 10GB each

- 4 x 3.84TB NVMe Disks (up to 10 disks)

- Ubuntu Linux 20.04 / 22.04 LTS

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- Anaconda Data Science Platform

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

- InfiniBand HDR 200Gb/s network for multi-node GPUDirect RDMA support

CompecTA SuperNova™ Series Archived Products

The following products are no longer available to order.

SuperNova Engine™ P100

74.8 TFLOPS of FP16 Performance

37.2 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Super-fast Neural Network Training

Good Inferencing

NOT AVAILABLE

CNVAIS1-P100V1

- 19" 1U Rackmount Chassis 4-way GPGPU

- Intel® Xeon® Processor E5-2697A v4

- 256 GB DDR4 2400MHz Memory

- 4 x NVIDIA Tesla P100 with 16GB HBM2 memory at 732 GB/s

- 14,336 Total CUDA® Cores

- 4 x 512GB SSD Disks (2 hot-swap and 2 fixed)

- Ubuntu 14.04 / 16.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Optional:

- Mellanox EDR 100Gb/s or FDR 56Gb/s InfiniBand for GPUDirect RDMA

- Faster Intel® Xeon® Scalable Processors with DDR4 2666MHz memory

SuperNova Engine™ V100

448 TFLOPS of Tensor Performance

56 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Super-fast Neural Network Training

Fast Inferencing

NOT AVAILABLE

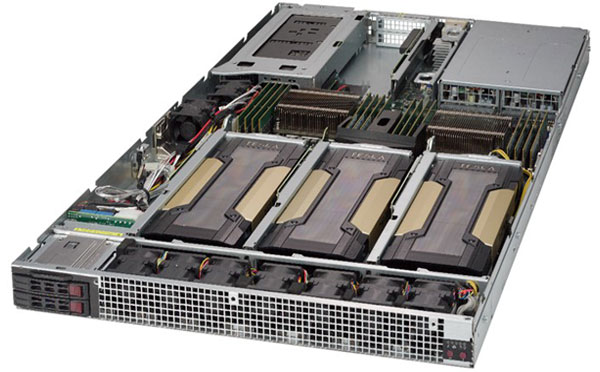

CNVAIS1-V100V1

- 19" 1U Rackmount Chassis 4-way GPGPU

- Intel® Xeon® Processor E5-2697A v4

- 256 GB DDR4 2400MHz Memory

- 4 x NVIDIA Tesla V100 with 16GB HBM2 memory at 900 GB/s

- 20,480 Total CUDA® Cores

- 2,560 Total Tensor Cores

- 4 x 512GB SSD Disks (2 hot-swap and 2 fixed)

- Ubuntu 14.04 / 16.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Optional:

- Mellanox EDR 100Gb/s or FDR 56Gb/s InfiniBand for GPUDirect RDMA

- Faster Intel® Xeon® Scalable Processors with DDR4 2666MHz memory

SuperNova Engine™ NP104

84.8 TFLOPS of FP16 Performance

42.4 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Super-fast Neural Network Training

Good Inferencing

NOT AVAILABLE

CNVAIS1-NP104V1

- 19" 1U Rackmount Chassis 4-way SXM2

- Intel® Xeon® Processor E5-2697A v4

- 512 GB DDR4 2400MHz Memory

- 4 x NVIDIA P100 SXM2 with NVLink, 16GB HBM2 memory at 732 GB/s

- Up to 80 GB/s GPU-to-GPU NVLink

- 14,336 Total CUDA® Cores

- 4 x 512GB SSD Disks (2 hot-swap and 2 fixed)

- Ubuntu 14.04 / 16.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Optional:

- Mellanox EDR 100Gb/s or FDR 56Gb/s InfiniBand for GPUDirect RDMA

- Faster Intel® Xeon® Scalable Processors with DDR4 2666MHz memory

- One additional NVIDIA® Tesla P40 for super-fast Inferencing

SuperNova Engine™ NV104

500 TFLOPS of Tensor Performance

62.8 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Super-fast Neural Network Training

Fast Inferencing

NOT AVAILABLE

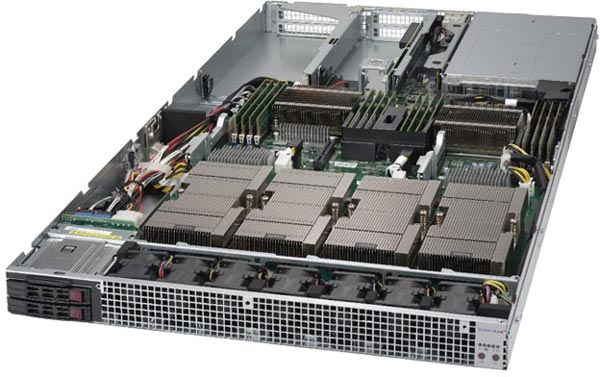

CNVAIS1-NV104V1

- 19" 1U Rackmount Chassis 4-way SXM2

- Intel® Xeon® Processor E5-2697A v4

- 512 GB DDR4 2400MHz Memory

- 4 x NVIDIA V100 SXM2 with NVLink, 16GB HBM2 memory at 900 GB/s

- Up to 300GB/s GPU-to-GPU NVLink

- 20,480 Total CUDA® Cores

- 2,560 Total Tensor Cores

- 4 x 512GB SSD Disks (2 hot-swap and 2 fixed)

- Ubuntu 14.04 / 16.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Optional:

- Mellanox EDR 100Gb/s or FDR 56Gb/s InfiniBand for GPUDirect RDMA

- Faster Intel® Xeon® Scalable Processors with DDR4 2666MHz memory

- One additional NVIDIA® Tesla P40 for super-fast Inferencing
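The practical difference between the NVLink figures quoted for the P100 systems (NP104/NP108, up to 80 GB/s) and the V100 systems (NV104/NV108, up to 300GB/s) can be estimated with simple arithmetic: transfer time is buffer size divided by bandwidth. The sketch below gives an idealized lower bound at peak bandwidth; the 1 GB buffer size and the `transfer_ms` helper are hypothetical and chosen only for illustration.

```python
# Back-of-envelope sketch: idealized time to move a buffer between two GPUs over NVLink.
# Bandwidths are the "up to" peak figures listed for these systems; real transfers
# reach less than peak, so these are lower bounds on transfer time.

NVLINK_PEAK_GB_PER_S = {
    "P100 SXM2 (NP104/NP108)": 80.0,
    "V100 SXM2 (NV104/NV108)": 300.0,
}


def transfer_ms(buffer_gb: float, bandwidth_gb_per_s: float) -> float:
    """Lower-bound transfer time in milliseconds at a sustained peak bandwidth."""
    return buffer_gb / bandwidth_gb_per_s * 1000.0


BUFFER_GB = 1.0  # hypothetical buffer size, e.g. one copy of a model's gradients
for name, bandwidth in NVLINK_PEAK_GB_PER_S.items():
    print(f"{name}: {transfer_ms(BUFFER_GB, bandwidth):.2f} ms for {BUFFER_GB} GB")
```

At these peak rates the V100 link moves the same buffer in well under a third of the time, which is why the NVLink systems are positioned for multi-GPU training.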

SuperNova Engine™ NP108

170 TFLOPS of FP16 Performance

84.8 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Ultra-fast Neural Network Training

Good Inferencing

NOT AVAILABLE

CNVAIS1-NP108V1

- 19" 4U Rackmount Chassis 8-way SXM2

- Intel® Xeon® Processor E5-2697A v4

- 512 GB DDR4 2400MHz Memory

- 8 x NVIDIA P100 SXM2 with NVLink, 16GB HBM2 memory at 732 GB/s

- Up to 80 GB/s GPU-to-GPU NVLink

- 28,672 Total CUDA® Cores

- 4 x 512GB SSD Disks (4 hot-swap)

- Ubuntu 14.04 / 16.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Optional:

- Up to 4 x Mellanox EDR 100Gb/s or FDR 56Gb/s InfiniBand for GPUDirect RDMA

SuperNova Engine™ NV108

1000 TFLOPS of Tensor Performance

125.6 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Ultra-fast Neural Network Training

Fast Inferencing

NOT AVAILABLE

CNVAIS1-NV108V1

- 19" 4U Rackmount Chassis 8-way SXM2

- Intel® Xeon® Processor E5-2697A v4

- 512 GB DDR4 2400MHz Memory

- 8 x NVIDIA V100 SXM2 with NVLink, 16GB HBM2 memory at 900 GB/s

- Up to 300GB/s GPU-to-GPU NVLink

- 40,960 Total CUDA® Cores

- 5,120 Total Tensor Cores

- 4 x 512GB SSD Disks (4 hot-swap)

- Ubuntu 14.04 / 16.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Optional:

- Up to 4 x Mellanox EDR 100Gb/s or FDR 56Gb/s InfiniBand for GPUDirect RDMA

SuperNova Engine™ P40

48 TFLOPS of FP32 Performance

188 TOPS of INT8 Performance

Best suited for:

Deep Learning Development

Good Neural Network Training

Super-fast Inferencing

NOT AVAILABLE

CNVAIS1-P40V1

- 19" 1U Rackmount Chassis 4-way GPGPU

- Intel® Xeon® Processor E5-2697A v4

- 256 GB DDR4 2400MHz Memory

- 4 x NVIDIA Tesla P40 24GB Memory

- 15,360 Total CUDA® Cores

- 4 x 512GB SSD Disks (2 hot-swap and 2 fixed)

- Ubuntu 14.04 / 16.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach

- 100k CPU hours on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Optional:

- Mellanox EDR 100Gb/s or FDR 56Gb/s InfiniBand for GPUDirect RDMA

- Faster Intel® Xeon® Scalable Processors with DDR4 2666MHz memory

Get more information!

Our experts can answer all your questions. Please reach us by email or phone.
