- +90 216 455-1865

- info@compecta.com

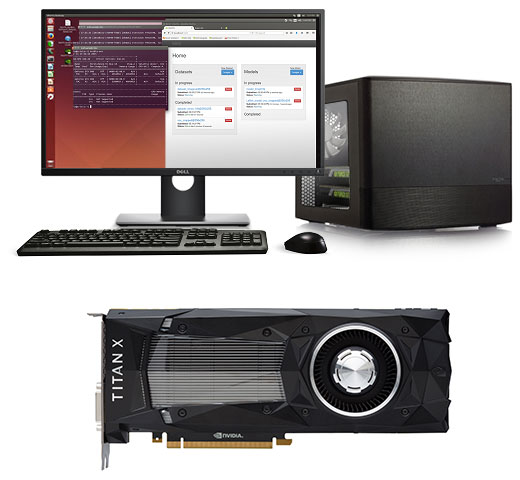

All-In-One & Ready to Use

CompecTA Nova Engine Mini™ is an all-in-one, compact, cool and quiet deskside solution equipped with up to 2 GPUs for Deep Learning & AI needs.

Built with the world’s best hardware, software, and systems engineering for deep learning & AI, it packs a powerful solution into a compact form factor.

It ships with a deep learning neural network library, deep learning frameworks, the operating system, and drivers pre-installed and ready to use.
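Once the system arrives, one quick way to confirm that the pre-installed driver sees every GPU is to query `nvidia-smi`. The sketch below is illustrative only: it assumes `nvidia-smi` is on the `PATH` (it is installed with the NVIDIA driver) and uses its CSV query mode; the helper names `parse_gpu_csv` and `query_gpus` are hypothetical and not part of any CompecTA tooling.

```python
import shutil
import subprocess
from typing import List, NamedTuple


class GpuInfo(NamedTuple):
    name: str
    memory_mib: int
    driver_version: str


def parse_gpu_csv(text: str) -> List[GpuInfo]:
    """Parse the output of
    `nvidia-smi --query-gpu=name,memory.total,driver_version --format=csv,noheader,nounits`
    into one record per GPU (hypothetical helper)."""
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        # rsplit from the right so a comma inside the GPU name cannot break parsing
        name, memory, driver = (field.strip() for field in line.rsplit(",", 2))
        gpus.append(GpuInfo(name, int(memory), driver))
    return gpus


def query_gpus() -> List[GpuInfo]:
    """Run nvidia-smi if it is available; return an empty list otherwise."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi",
             "--query-gpu=name,memory.total,driver_version",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (subprocess.CalledProcessError, OSError):
        # Driver not loaded or nvidia-smi failed: report no GPUs
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("nvidia-smi not found or no GPUs reported")
    for index, gpu in enumerate(gpus):
        print(f"GPU {index}: {gpu.name}, {gpu.memory_mib} MiB, driver {gpu.driver_version}")
```

On a two-GPU configuration the script should list two entries; if it reports fewer, the driver or hardware installation is worth checking with support.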

Enterprise Level Support

CompecTA Nova Engine Mini™ comes with an Enterprise Level Support package from HPC experts with over 20 years of experience in the field.

We will assist you with any problem you may encounter with your system.

Free CPU Time

CompecTA Nova Engine Mini™ comes with 50,000 hours of CPU time on FeynmanGrid™, CompecTA's in-house HPC cluster service.

Use this opportunity to gain experience with HPC clusters, to benchmark an application, or to test new code.

CompecTA Nova Mini™ Series

Mini Deep Learning Developer Box with NVIDIA DIGITS™

Nova Engine Mini™ R49 NEW

1.32 petaFLOPS Tensor Performance

83 TFLOPS of FP16 Performance

83 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Super-Fast Neural Network Training

Super-Fast Inferencing

REQUEST A QUOTE

CNVMDB3-R49V1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- INTEL / AMD up to 64-Core CPU

- 64 GB DDR4 3000MHz Memory (up to 256GB)

- 1 x NVIDIA GeForce RTX 4090 384-bit 24GB GDDR6X (Ada Lovelace)

- 16,384 total CUDA® Cores

- 512 total 4th Gen Tensor Cores

- 8TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 1TB PCIe M.2 NVMe SSD for OS

- 1200W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu Linux 20.04 / 22.04 LTS

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- Anaconda Data Science Platform

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ R39 POPULAR

570 TFLOPS Tensor Performance

70 TFLOPS of FP16 Performance

70 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Fast Neural Network Training

Fast Inferencing

Prices starting from

$7,995 (Excluding Taxes)

CNVMDB3-R39V1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- INTEL / AMD up to 64-Core CPU

- 64 GB DDR4 3000MHz Memory (up to 256GB)

- 2 x NVIDIA GeForce RTX 3090 384-bit 24GB GDDR6X (Ampere)

- 20,992 total CUDA® Cores

- 656 total 3rd Gen Tensor Cores

- 8TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 1TB PCIe M.2 NVMe SSD for OS

- 1200W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu Linux 20.04 / 22.04 LTS

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- Anaconda Data Science Platform

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ RTX6 Ada NEW

Tensor Performance: To Be Announced

FP16 Performance: To Be Announced

FP32 Performance: To Be Announced

Best suited for:

Deep Learning Development

Super-Fast Neural Network Training

Super-Fast Inferencing

REQUEST A QUOTE

CNVMDB3-RTX6V1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- INTEL / AMD up to 64-Core CPU

- 128 GB DDR4 3000MHz Memory (up to 256GB)

- 2 x NVIDIA RTX 6000 384-bit 48GB GDDR6 with ECC (Ada Lovelace)

- 36,352 total CUDA® Cores

- 1,136 total 4th Gen Tensor Cores

- 8TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 1TB PCIe M.2 NVMe SSD for OS

- 1200W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu Linux 20.04 / 22.04 LTS

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- Anaconda Data Science Platform

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ RA6

619.4 TFLOPS Tensor Performance

77.42 TFLOPS of FP16 Performance

77.42 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Fast Neural Network Training

Fast Inferencing

Prices starting from

$14,475 (Excluding Taxes)

CNVMDB3-RA6V1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- INTEL / AMD up to 64-Core CPU

- 128 GB DDR4 3000MHz Memory (up to 256GB)

- 2 x NVIDIA RTX A6000 384-bit 48GB GDDR6 with ECC (Ampere)

- 21,504 total CUDA® Cores

- 672 total 3rd Gen Tensor Cores

- 8TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 1TB PCIe M.2 NVMe SSD for OS

- 1200W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu Linux 20.04 / 22.04 LTS

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- Anaconda Data Science Platform

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services
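The CUDA and Tensor Core counts listed for each configuration are system totals, summed over all GPUs in the box. As a small illustration of that arithmetic, the sketch below divides each listed total by the GPU count to recover the per-card figures. It assumes every GPU in a configuration is identical; the `Config` class and `per_gpu` helper are hypothetical names used only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    model: str
    gpu_count: int
    total_cuda_cores: int
    total_tensor_cores: int


# System totals as listed for the current Nova Engine Mini configurations
CONFIGS = [
    Config("R49 (GeForce RTX 4090)", 1, 16_384, 512),
    Config("R39 (GeForce RTX 3090)", 2, 20_992, 656),
    Config("RTX6 Ada (RTX 6000)", 2, 36_352, 1_136),
    Config("RA6 (RTX A6000)", 2, 21_504, 672),
]


def per_gpu(total: int, gpu_count: int) -> int:
    """Split a system-wide total evenly across identical GPUs."""
    if total % gpu_count != 0:
        raise ValueError(f"{total} is not divisible by {gpu_count}")
    return total // gpu_count


if __name__ == "__main__":
    for cfg in CONFIGS:
        cuda = per_gpu(cfg.total_cuda_cores, cfg.gpu_count)
        tensor = per_gpu(cfg.total_tensor_cores, cfg.gpu_count)
        print(f"{cfg.model}: {cuda:,} CUDA cores and {tensor:,} Tensor Cores per GPU")
```

For the R39, for example, this gives 10,496 CUDA cores and 328 Tensor Cores per RTX 3090.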

CompecTA Nova Mini™ Series Archived Products

The following products are no longer available to order.

Nova Engine Mini™ R80Ti

215.2 TFLOPS DL Performance

53.8 TFLOPS of FP16 Performance

26.9 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Fast Neural Network Training

Fast Inferencing

NOT AVAILABLE

CNVMDB2-R80TIV1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- Intel Core i9-9900K, 3.60 GHz, 8-Core CPU

- 64 GB DDR4 2666MHz Memory (up to 128GB)

- 2 x NVIDIA GeForce RTX 2080Ti 384-bit 11GB GDDR6

- 8,704 total CUDA® Cores

- 1,088 total Tensor Cores

- 4TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 500GB PCI-e M.2 SSD for OS

- 850W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu 16.04 / 18.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ TiR

261 TFLOPS DL Performance

65.2 TFLOPS of FP16 Performance

32.6 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Fast Neural Network Training

Fast Inferencing

NOT AVAILABLE

CNVMDB2-TIRV1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- Intel Core i9-9900K, 3.60 GHz, 8-Core CPU

- 64 GB DDR4 2666MHz Memory (up to 128GB)

- 2 x NVIDIA Titan RTX 384-bit 24GB GDDR6

- 9,216 total CUDA® Cores

- 1,152 total Tensor Cores

- 4TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 500GB PCI-e M.2 SSD for OS

- 850W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu 16.04 / 18.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ GV100

237 TFLOPS DL Performance

59.2 TFLOPS of FP16 Performance

29.6 TFLOPS of FP32 Performance

14.8 TFLOPS of FP64 Performance

Best suited for:

Deep Learning Development

Machine Learning

Super-Fast Neural Network Training

Super-Fast Inferencing

NOT AVAILABLE

CNVMDB2-GV100V1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- Intel Core i9-9900K, 3.60 GHz, 8-Core CPU

- 64 GB DDR4 2666MHz Memory (up to 128GB)

- 2 x NVIDIA Quadro GV100 4096-bit 32GB HBM2

- 10,240 total CUDA® Cores

- 1,280 total Tensor Cores

- 4TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 500GB PCI-e M.2 SSD for OS

- 850W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu 16.04 / 18.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA-X

- NVIDIA® cuDNN™

- RAPIDS, Caffe, Theano, Torch, BIDMach, TensorFlow

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ QRTX8

261 TFLOPS DL Performance

412.2 TOPS of INT8 Performance

65.2 TFLOPS of FP16 Performance

32.6 TFLOPS of FP32 Performance

Best suited for:

Deep Learning Development

Machine Learning

Super-Fast Neural Network Training

Super-Fast Inferencing

NOT AVAILABLE

CNVMDB2-QR8V1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- Intel Core i9-9900K, 3.60 GHz, 8-Core CPU

- 64 GB DDR4 2666MHz Memory (up to 128GB)

- 2 x NVIDIA Quadro RTX 8000 384-bit 48GB GDDR6

- 9,216 total CUDA® Cores

- 1,152 total Tensor Cores

- 4TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 500GB PCI-e M.2 SSD for OS

- 850W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu 16.04 / 18.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA-X

- NVIDIA® cuDNN™

- RAPIDS, Caffe, Theano, Torch, BIDMach, TensorFlow

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ TiV

220 TFLOPS of DL Performance

27.6 TFLOPS of FP32 Performance

13.8 TFLOPS of FP64 Performance

Best suited for:

Deep Learning Development

Super-Fast Neural Network Training

Fast Inferencing

NOT AVAILABLE

CNVMDB2-TIVV1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- Intel Core i9-9900K, 3.60 GHz, 8-Core CPU

- 64 GB DDR4 2666MHz Memory (up to 128GB)

- 2 x NVIDIA Titan V Volta 3072-bit 12GB HBM2

- 10,240 total CUDA® Cores

- 1,280 total Tensor Cores

- 4TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 500GB PCI-e M.2 SSD for OS

- 850W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu 16.04 / 18.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ TiXp

24.2 TFLOPS of FP32 Performance

94 TOPS of INT8 Performance

Best suited for:

Deep Learning Development

Good Neural Network Training

Fast Inferencing

NOT AVAILABLE

CNVMDB2-TXPV2

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- Intel Core i9-9900K, 3.60 GHz, 8-Core CPU

- 64 GB DDR4 2666MHz Memory (up to 128GB)

- 2 x NVIDIA TITAN Xp Pascal 384-bit 12GB GDDR5X

- 7,680 total CUDA® Cores

- 4TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 500GB PCI-e M.2 SSD for OS

- 850W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu 16.04 / 18.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ G80Ti

22.6 TFLOPS of FP32 Performance

90 TOPS of INT8 Performance

Best suited for:

Deep Learning Development

Good Neural Network Training

Fast Inferencing

NOT AVAILABLE

CNVMDB2-G80TIV1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- Intel Core i9-9900K, 3.60 GHz, 8-Core CPU

- 64 GB DDR4 2666MHz Memory (up to 128GB)

- 2 x NVIDIA GeForce GTX 1080Ti 384-bit 11GB GDDR5X

- 7,168 total CUDA® Cores

- 4TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 500GB PCI-e M.2 SSD for OS

- 850W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu 16.04 / 18.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Nova Engine Mini™ GP100

41.4 TFLOPS of FP16 Performance

20.6 TFLOPS of FP32 Performance

10.4 TFLOPS of FP64 Performance

Best suited for:

Deep Learning Development

Fast Neural Network Training

Fast Inferencing

NOT AVAILABLE

CNVMDB2-GP100V1

- Dell 24" Full HD IPS Monitor

- Workstation Class Motherboard

- Intel Core i9-9900K, 3.60 GHz, 8-Core CPU

- 64 GB DDR4 2666MHz Memory (up to 128GB)

- 2 x NVIDIA Quadro GP100 4096-bit 16GB HBM2

- 7,168 total CUDA® Cores

- 4TB SATA 6Gb/s 3.5” Enterprise Hard Drive

- 500GB PCI-e M.2 SSD for OS

- 850W Power Supply

- Logitech Wireless Keyboard and Mouse

- Ubuntu 16.04 / 18.04

- NVIDIA-qualified driver

- NVIDIA® DIGITS™

- NVIDIA® CUDA® Toolkit

- NVIDIA® cuDNN™

- Caffe, Theano, Torch, BIDMach, TensorFlow

- 50k hours of CPU Time on FeynmanGrid™

- Enterprise Level Support from CompecTA® HPC Professional Services

Get more information!

Our experts can answer all your questions. Please reach us by email or phone.
