- +90 216 455-1865

- info@compecta.com

AI DATA CENTER IN A BOX

Data science teams are at the leading edge of innovation, but they're often left searching for available AI compute cycles to complete projects. They need a dedicated resource that can plug in anywhere and deliver maximum performance to multiple simultaneous users, wherever they are in the world. NVIDIA DGX Station™ A100 brings AI supercomputing to data science teams, offering data center technology without a data center or additional IT infrastructure. Powerful performance, a fully optimized software stack, and direct access to NVIDIA DGXperts ensure faster time to insight.

Data Center Performance Anywhere

AI Supercomputing for Data Science Teams

With DGX Station A100, organizations can provide multiple users with a centralized AI resource for all workloads (training, inference, and data analytics) that delivers an immediate on-ramp to NVIDIA DGX™-based infrastructure and works alongside other NVIDIA-Certified Systems. And with Multi-Instance GPU (MIG), it's possible to allocate up to 28 separate GPU devices (up to seven MIG instances on each of the four A100 GPUs) to individual users and jobs.
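The 28-device figure follows from MIG's limit of seven GPU instances per A100: four GPUs × seven instances = 28. The Python sketch below illustrates that arithmetic and one simple way a team might hand out the resulting slices to a list of jobs, interleaving across GPUs so the first jobs land on different physical cards. The function names, the `GPU<i>/MIG<j>` labels and the job names are hypothetical illustrations, not part of any NVIDIA tool; on a real system, MIG instances are created and listed with `nvidia-smi`.

```python
from typing import Dict, List

# An A100 GPU can be partitioned into at most seven MIG GPU instances.
MAX_MIG_INSTANCES_PER_GPU = 7


def total_mig_devices(num_gpus: int, per_gpu: int = MAX_MIG_INSTANCES_PER_GPU) -> int:
    """Upper bound on separate MIG devices across all GPUs in the system."""
    if not 1 <= per_gpu <= MAX_MIG_INSTANCES_PER_GPU:
        raise ValueError("an A100 supports 1 to 7 MIG instances per GPU")
    return num_gpus * per_gpu


def mig_slots(num_gpus: int, per_gpu: int = MAX_MIG_INSTANCES_PER_GPU) -> List[str]:
    """Hypothetical slice labels, ordered so consecutive slots alternate GPUs."""
    return [f"GPU{g}/MIG{m}" for m in range(per_gpu) for g in range(num_gpus)]


def assign_jobs(jobs: List[str], slots: List[str]) -> Dict[str, str]:
    """Give each job its own slice; refuse to oversubscribe."""
    if len(jobs) > len(slots):
        raise ValueError("more jobs than available MIG slices")
    return dict(zip(jobs, slots))


if __name__ == "__main__":
    print(total_mig_devices(4))  # 28
    jobs = ["alice-train", "bob-infer", "carol-etl"]
    print(assign_jobs(jobs, mig_slots(4)))
    # {'alice-train': 'GPU0/MIG0', 'bob-infer': 'GPU1/MIG0', 'carol-etl': 'GPU2/MIG0'}
```

Raising an error instead of wrapping around keeps the sketch faithful to the idea of *separate* devices: two jobs never share one slice.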

Data Center Performance without the Data Center

DGX Station A100 is a server-grade AI system that doesn’t require data center power and cooling. It includes four NVIDIA A100 Tensor Core GPUs, a top-of-the-line, server-grade CPU, super-fast NVMe storage, and leading-edge PCIe Gen4 buses, along with remote management so you can manage it like a server.

An AI Appliance You Can Place Anywhere

Designed for today's agile data science teams working in corporate offices, labs, research facilities, or even from home, DGX Station A100 requires no complicated installation or significant IT infrastructure. Simply plug it into any standard wall outlet to get up and running in minutes and work from anywhere.

Bigger Models, Faster Answers

NVIDIA DGX Station A100 is the world’s only office-friendly system with four fully interconnected and MIG-capable NVIDIA A100 GPUs, leveraging NVIDIA® NVLink® for running parallel jobs and multiple users without impacting system performance. Train large models using a fully GPU-optimized software stack and up to 320 gigabytes (GB) of GPU memory.

Iterate and Innovate Faster

High-performance training accelerates your productivity, which means faster time to insight and faster time to market.

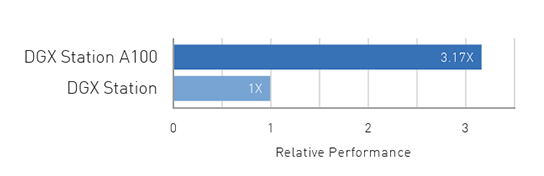

TRAINING

BERT Large Pre-Training Phase 1

Over 3X Faster

DGX Station A100 320GB; Batch Size=64; Mixed Precision; With AMP; Real Data; Sequence Length=128

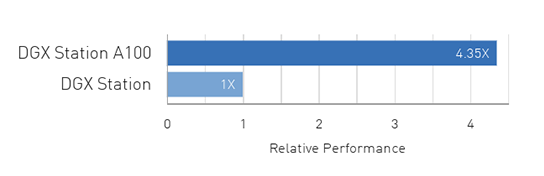

INFERENCE

BERT Large Inference

Over 4X Faster

DGX Station A100 320GB; Batch Size=256; INT8 Precision; Synthetic Data; Sequence Length=128; cuDNN Version=8.0.4

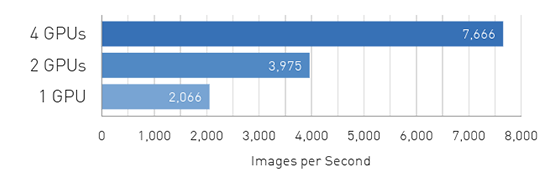

MULTI-GPU SCALABILITY

ResNet-50 V1.5 Training

Linear Scalability

DGX Station A100 320GB; Batch Size=192; Mixed Precision; Real Data; cuDNN Version=8.0.4; NCCL Version=2.7.8; NGC MXNet 20.10 Container
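"Linear scalability" means that training throughput grows roughly in proportion to the number of GPUs. A common way to quantify this is scaling efficiency, E(N) = T_N / (N × T_1), where T_1 and T_N are the throughputs (for example, images per second) measured on one GPU and on N GPUs; linear scaling corresponds to E(N) close to 1. The Python sketch below computes that ratio. The function names and the 0.9 cut-off for "near-linear" are illustrative assumptions, and the numbers in the usage lines are placeholders, not measurements from the chart above.

```python
def scaling_efficiency(throughput_1: float, throughput_n: float, n_gpus: int) -> float:
    """Return T_N / (N * T_1); 1.0 means perfectly linear scaling."""
    if throughput_1 <= 0 or n_gpus < 1:
        raise ValueError("throughput_1 must be positive and n_gpus must be >= 1")
    return throughput_n / (n_gpus * throughput_1)


def is_near_linear(efficiency: float, threshold: float = 0.9) -> bool:
    """Hypothetical rule of thumb: treat >= 90% efficiency as near-linear."""
    return efficiency >= threshold


if __name__ == "__main__":
    # Placeholder throughputs in images/s, NOT benchmark results.
    eff = scaling_efficiency(throughput_1=1000.0, throughput_n=3800.0, n_gpus=4)
    print(f"{eff:.2f}", is_near_linear(eff))  # 0.95 True
```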

AI Training

Deep learning datasets are becoming larger and more complex, with workloads like conversational AI, recommender systems, and computer vision becoming increasingly prevalent across industries. NVIDIA DGX Station A100, which comes with an integrated software stack, is designed to deliver the fastest time to solution on complex AI models compared with PCIe-based workstations.

AI Inference

Inference workloads are typically deployed in the data center, because they use every available compute resource and require an agile, elastic infrastructure that can scale out. NVIDIA DGX Station A100 is perfectly suited for testing inference performance and results locally before deploying in the data center. Integrated technologies such as MIG accelerate inference workloads and provide the high throughput and real-time responsiveness needed to bring AI applications to life.
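One way to use MIG for local inference testing is to run one inference worker per MIG instance and pin each process to its slice through the `CUDA_VISIBLE_DEVICES` environment variable, which on recent drivers accepts MIG device identifiers of the form `MIG-<UUID>` (as listed by `nvidia-smi -L`). The Python sketch below builds one environment per worker and, optionally, launches a script with it. The UUIDs, the `serve.py` script name and the `worker_envs` / `launch_workers` helpers are hypothetical placeholders, not part of NVIDIA's software stack.

```python
import os
import subprocess
import sys
from typing import Dict, List, Optional


def worker_envs(mig_uuids: List[str], base_env: Optional[Dict[str, str]] = None) -> List[Dict[str, str]]:
    """Build one environment per worker, each exposing exactly one MIG device."""
    base = dict(os.environ if base_env is None else base_env)
    envs = []
    for uuid in mig_uuids:
        env = dict(base)  # copy so workers never share (or mutate) one dict
        env["CUDA_VISIBLE_DEVICES"] = uuid  # restrict the process to this slice
        envs.append(env)
    return envs


def launch_workers(script: str, envs: List[Dict[str, str]]) -> List[subprocess.Popen]:
    """Start one copy of a (hypothetical) inference script per MIG slice."""
    return [subprocess.Popen([sys.executable, script], env=env) for env in envs]


if __name__ == "__main__":
    # Placeholder identifiers; real ones come from `nvidia-smi -L`.
    uuids = ["MIG-aaaaaaaa-0000", "MIG-bbbbbbbb-0000"]
    for env in worker_envs(uuids):
        print(env["CUDA_VISIBLE_DEVICES"])
    # On a MIG-enabled system one might then call:
    # launch_workers("serve.py", worker_envs(uuids))
```

Because each worker only sees its own slice, throughput and latency measured this way reflect what one MIG instance can deliver in isolation.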

Data Analytics

Every day, businesses are generating and collecting unprecedented amounts of data. This massive amount of information represents a missed opportunity for those not using GPU-accelerated analytics. The more data you have, the more you can learn. With NVIDIA DGX Station A100, data science teams can derive actionable insights from their data faster than ever before.

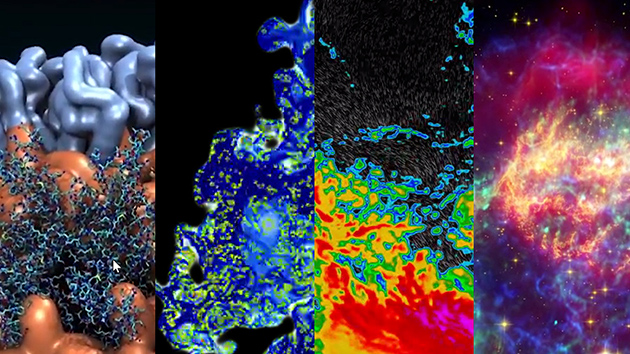

High-Performance Computing

High-performance computing (HPC) is one of the most important tools fueling the advancement of science. With over 700 applications across a broad range of domains optimized for them, NVIDIA GPUs are the engine of the modern HPC data center. Equipped with four NVIDIA A100 Tensor Core GPUs, DGX Station A100 is the perfect system for developers to test scientific workloads before deploying them on their HPC clusters, delivering breakthrough performance at the office or even from home.

Order your NVIDIA DGX Station A100 Today

An AI supercomputer for data science teams, powered by four of the world's fastest AI accelerators and up to 320GB of GPU memory. Data center performance without the data center. Install it almost anywhere!

NVIDIA academic discounts are available. Please contact us for eligibility requirements and legal terms and conditions.

Get more information!

Our experts can answer all your questions. Please reach us by email or phone.
